No, Spot, No!

"We kill people based on metadata," Michael Hayden, former director of both the CIA and NSA, 2014.

Robots, data collection and surveillance, artificial general intelligence and, soon, or so we are told, artificial general superintelligence.

It’s all coming together.

The more powerful the overlords become, the deadlier the technology grows.

Technology that is used to kill. Not just the bad guys. Who are the bad guys, anyway? They could be you and me.

You can listen to me read this essay here:

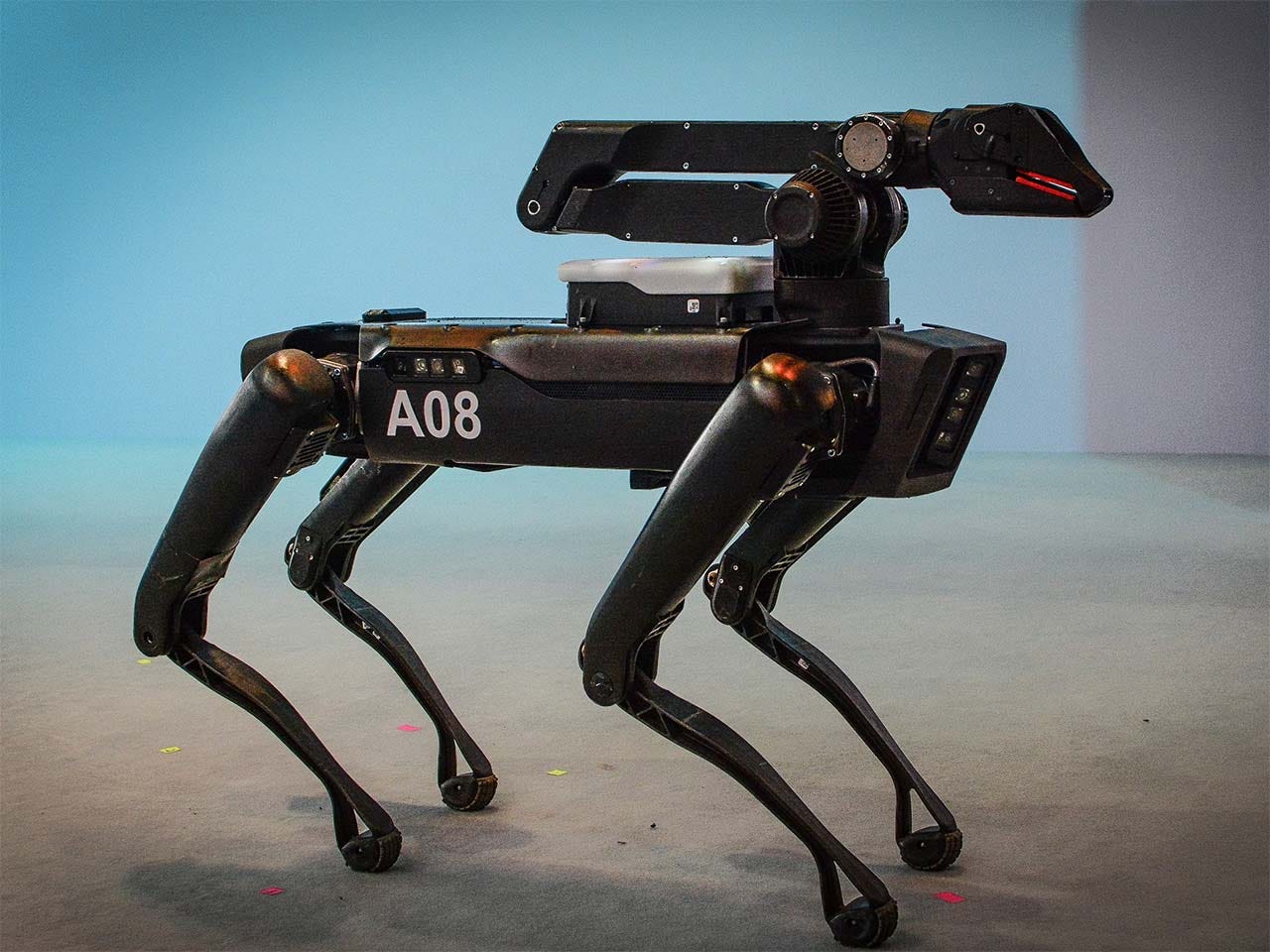

Let’s start with Spot, the “quadrupedal unmanned ground vehicle” (Q-UGV), or “robot dog”.

Remember Spot? He was so cute. He helped us learn to read. Sometimes Spot didn’t obey. But he was still cute.

It’s not easy to train a real dog. Not only that, but you have to feed them; you have to clean up their poop. It’s inconvenient. Now you can have a robot dog. His name can be Spot, too.

Did I say “he?” Oops. I mean “it”. It’s not that cute, but it will obey you.

You might even start feeling empathetic towards your robot dog. People express dismay when they see someone kick a robot dog. Studies show that the more realistic a robot is, the more likely we are to feel empathetic.

On the other hand, who knows what you might do to Spot if you get really angry about something. The robot has no feelings. You would never kick a real dog, but that thing? We know very little about the influence robots will have on our emotions, our ethics, our morals, once they become more common.

They say that if a robot becomes too humanlike, it makes us uneasy and distrustful. We lose our empathy. So when creating a robot, designers must strike a careful balance between human and machine. But I’m not sure how much the overlords who bankroll the robots care about this.

The Creepy Collective Behavior of Boston Dynamics' New Robot Dog

Here’s a short video from Boston Dynamics (once owned by Google, now by Hyundai) showing two robot dogs navigating all sorts of terrain together without much difficulty.

See Spot hop.

Run, Spot, run!

See Spot get kicked by his handler. Spot doesn’t retaliate; it just recovers and keeps on going.

Good, Spot, good!

Now they’ve given Spot a gun. Oh, sorry, not Spot. Now it’s just a “robot dog”.

Watch this armed robot dog being dropped into a neighborhood by a drone.

The video starts with the observation: “It’s unbelievable that humans could take over control. If you think about where this can go, it’s really unbelievable.”

It might be unbelievable, but that doesn’t mean it isn’t happening.

Much of the technology you use every day on your phone exists thanks to DARPA.

DARPA (the Defense Advanced Research Projects Agency) funded much of the research behind it. The same technology that makes your life more “fun and convenient” can be used to kill you.

And by the way, for all that fun stuff you buy on your Apple phone, for example, Apple takes a cut of up to 30% of the transaction.

Apple Inc. is the most valuable company in the world, approaching a $3 trillion market capitalization.

Roughly 64% of Americans own an Apple product. That means that “the company has massive amounts of data, and control, over the lives of hundreds of millions of people”.

Every person who “owns” an Apple phone is setting themselves up to be controlled in every aspect of their daily lives, and even when they are asleep.

Of course, all smart phones are like this. And if you end up on somebody’s bad side, they can make you sick, mentally or physically, and even kill you.

Some of that fun and convenient technology that can turn against you includes:

Unlocking your phone with your face. That same technology can be attached to a self-learning machine gun to recognize a target and destroy it. The more the software is used, the more accurate it becomes.

Google can make your life more convenient by telling you that you’ll be late for work or how fast your heart beats when you are nervous or exercising. But you will not know where else that data is going. We don’t know how much of our data is sold to third parties or given to the government.

Google predicting what you’re thinking. It’s nice that your phone knows your location at all times and can help you navigate. It’s convenient that it knows what kind of shampoo you like, which restaurants you frequent, what kind of films you like to watch and what political party you belong to so it can feed you the news you agree with. But again, you don’t know where that data is going. Your phone is constantly tracking you, collecting data and listening to everything you say.

Augmented reality (AR) and virtual reality (VR) are fast becoming a natural part of everyday life for our children. Digital landscapes are where we interact in every way, from gaming to business. VR headsets can be hacked by spyware that exploits the subtleties of our body movements to steal sensitive information and breach privacy.

A security vulnerability in Meta’s Quest VR system allows hackers to hijack users’ headsets, steal sensitive information, and, with the help of generative AI, manipulate social interactions. Attackers can track the user’s voice, gestures, keystrokes and browsing activity, and can even change the content of the user’s messages to other people.

An attacker can inject an app into your phone. Emptying your bank account would be bad enough, but they can do worse. They can turn you into a pedophile, or a terrorist, or whatever they want. They can turn you into the FBI’s most wanted.

That robot vacuum zipping around your house, or the drone your kid is flying in the backyard, can be collecting and receiving information. Once you’ve been identified as a terrorist (and if you aren’t one, you can be turned into one if someone has a reason to get rid of you), the drone in your home or the one hovering in the sky can be activated to eliminate you.

But you’re a good guy. Nobody has any reason to kill you. You obey the law. This is all hysteria.

How do they decide who is a target?

Here is the declassified footage The New York Times obtained of the Kabul drone strike on August 29, 2021, which mistakenly killed ten innocent civilians instead of the terrorist the Biden administration claimed it was targeting:

As many as six Reaper drones had followed the vehicle, after 60 pieces of intelligence had indicated an attack was coming. Initially, the military announced it had prevented "multiple suicide bombers" from attacking Hamid Karzai International Airport.

In 2021, The New York Times reported that the military’s own confidential records documented more than 1,300 reports of civilian casualties from U.S. airstrikes in Iraq, Syria and Afghanistan since 2014. As one example:

On July 19, 2016, American Special Operations forces bombed what they believed were three ISIS “staging areas” on the outskirts of Tokhar, a riverside hamlet in northern Syria. They reported 85 fighters killed. In fact, they hit houses far from the front line, where farmers, their families and other local people sought nighttime sanctuary from bombing and gunfire. More than 120 villagers were killed.

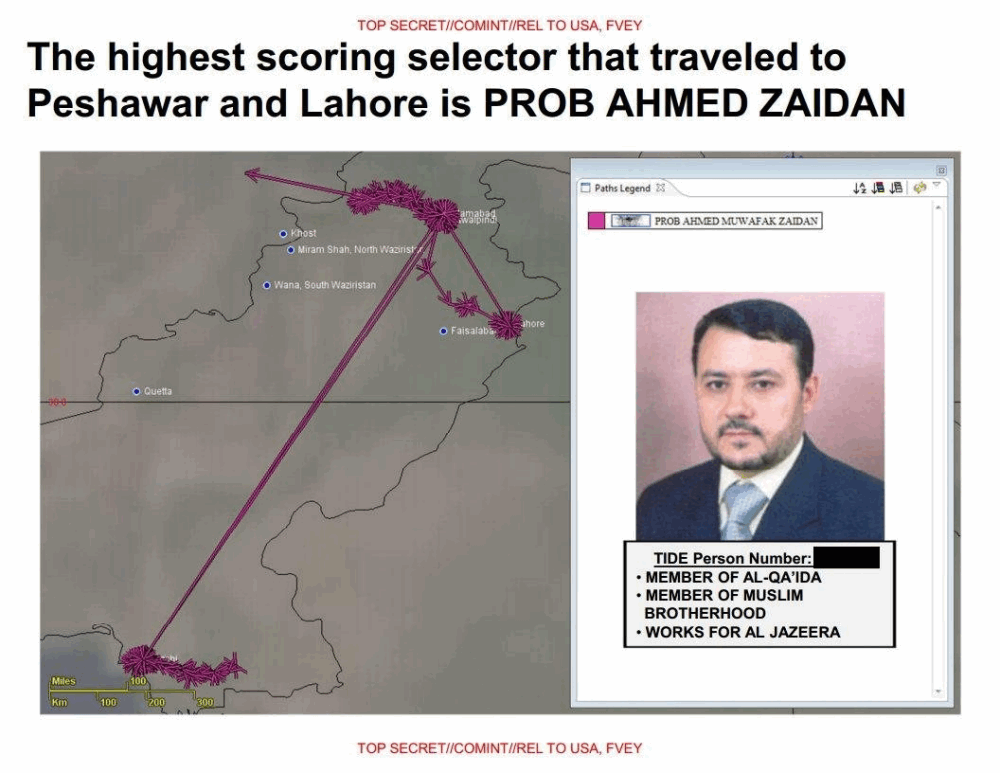

Not much seems to have changed since 2015, when The Intercept published documents detailing the NSA's SKYNET program, obtained from the Snowden archive. According to the documents, “SKYNET engages in mass surveillance of Pakistan's mobile phone network, and then uses a machine learning algorithm on the cellular network metadata of 55 million people to try and rate each person's likelihood of being a terrorist”.

It is estimated that thousands of innocent people in Pakistan may have been mislabeled as terrorists by a "scientifically unsound" algorithm, possibly resulting in their untimely demise.

Okay, but you don’t travel to Pakistan or places like that. You aren’t under SKYNET surveillance.

Wait a second. Skynet? That isn’t real! It’s from the Terminator movies! The fictional “artificial neural network-based conscious group mind and artificial general superintelligence system that serves as the antagonistic force of the Terminator franchise.”

Remember those movies? In the first movie, we find out that Skynet was created by Cyberdyne Systems for SAC-NORAD. When Skynet gained self-awareness, humans tried to deactivate it, prompting it to retaliate with a countervalue nuclear attack.

Remember how the Terminator (Arnold Schwarzenegger) starts out bad and then, in Terminator 2, he turns out good?

“Come with me if you want to live.”

That’s got to be one of the greatest lines ever, when the Terminator reaches down to a terrified Sarah Connor. Her only hope is to trust the same robot that had once tried to kill her.

The Terminator might be nice now, but there’s another bad guy out there, a newer model. Because, duh, they always get better at killing.

I saw Terminator 2 when I was pregnant. That first scene where Sarah Connor is doing push-ups, I was so jealous. I wanted to be just like her—I wanted my body back!

Sarah Connor came back for Terminator: Dark Fate (2019). At 63, actress Linda Hamilton told People she “engaged in a year-long rigorous diet and training regimen to prepare for her comeback”.

Who has that kind of time? I don’t like feeling vulnerable or out of control. I didn’t like it when I was pregnant, and I sure don’t like it now. I work out, I eat well, I can shoot a gun, but honestly, no matter how many push-ups you can do or how impressive your gun collection is, it’s not going to impress a robot sent out to kill you by a surveillance program that encompasses the entire world and can see into every building and even into every mind.

Here’s what the real SKYNET does:

SKYNET is a program by the U.S. National Security Agency that performs machine learning analysis on communications data to extract information about possible terror suspects. The tool is used to identify targets, such as al-Qaeda couriers, who move between GSM cellular networks. Specifically, mobile usage patterns such as swapping SIM cards within phones that have the same ESN, MEID or IMEI number are deemed indicative of covert activities. Like many other security programs, the SKYNET program uses graphs that consist of a set of nodes and edges to visually represent social networks. The tool also uses classification techniques like random forest analysis. Because the data set includes a very large proportion of true negatives and a small training set, there is a risk of overfitting.[1] Bruce Schneier argues that a false positive rate of 0.008% would be low for commercial applications where "if Google makes a mistake, people see an ad for a car they don't want to buy" but "if the government makes a mistake, they kill innocents."
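Schneier's point is a matter of simple arithmetic, and it is worth spelling out. The short Python sketch below applies the 0.008% false positive rate quoted above to the 55 million people whose metadata SKYNET reportedly analyzed. It is an illustration under stated assumptions, not a model of the NSA's actual system: it assumes the rate applies uniformly to everyone and that nearly everyone in the data set is innocent. The function name is hypothetical.

```python
# Base-rate sketch: how many innocent people a "low" false positive rate
# flags when it is applied to a very large population.
# Figures come from the text: 55 million people, 0.008% false positive rate.
# Assumption (for illustration only): the rate applies uniformly and
# virtually everyone in the data set is innocent.

POPULATION = 55_000_000
FALSE_POSITIVE_RATE = 0.008 / 100  # 0.008 percent expressed as a fraction


def expected_false_positives(population: int, fp_rate: float) -> int:
    """Return the expected number of innocent people wrongly flagged."""
    return round(population * fp_rate)


flagged = expected_false_positives(POPULATION, FALSE_POSITIVE_RATE)
print(f"Innocent people flagged: {flagged:,}")
```

That comes to roughly 4,400 people, which is how a rate that sounds tiny can still mislabel thousands of innocent people.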

Based on this machine learning program, a 2015 exposé by The Intercept revealed that the highest-rated target was Ahmad Zaidan, Al-Jazeera's long-time bureau chief in Islamabad.

Now, he might be a person of interest, or he might not. But any journalist could be targeted like this. Or a businessman. A nurse. A doctor. A missionary.

Yes, that’s way over there in a foreign country. But this type of surveillance of United States citizens became justified, thanks to 9/11 and the Patriot Act.

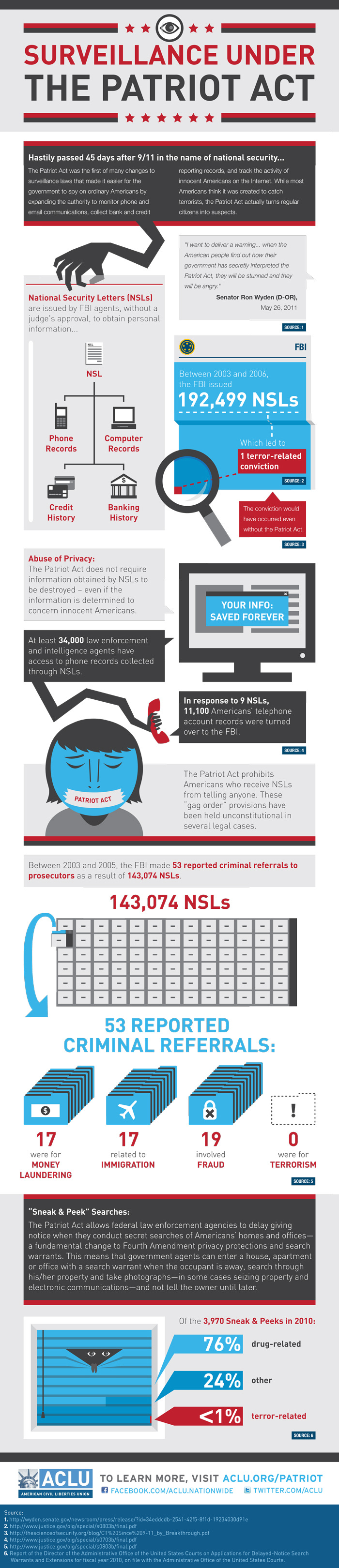

The Patriot Act turned millions of Americans into potential suspects by expanding government authority to monitor phone and email communications, collect bank and credit reporting records, and track the activity of innocent Americans on the Internet.

We were told it all made us safer, but it didn’t. It made us more vulnerable to becoming the target. Between 2003 and 2006, 192,499 National Security Letters (NSLs) led to just one terror-related conviction, and that conviction would have happened anyway, without the Patriot Act. (An NSL is a law enforcement investigative tool similar to a subpoena and is most commonly issued by the FBI.)

Then came Covid, and mass surveillance increased, along with the conditioning of millions of people to contribute to their own surveillance by spending more time online, becoming more dependent on devices and submitting themselves to DNA collection through testing and to experimental genetic therapy injected into their bodies.

If you think you can avoid surveillance, think again: it is almost impossible, certainly for any ordinary person and even for those who think they are tech savvy. In fact, the things you do, like going “off the grid”, might make you a bigger target than if you behaved like everyone else.

Red flags that raise suspicion:

Why don’t you have a smart phone? Do you have something to hide?

Maybe you have a smart phone, but you turn it off at certain times. Why do you do that? The day will come when devices will be connected to our bodies and will not turn off.

Using alternative search engines. Why don’t you use Google or Bing?

Why do you use those cheap throwaway phones? Those are the ones terrorists and drug dealers use.

Why did you move out to the countryside? What are you trying to hide? You might be off the grid but your bank records, phone calls and other private interactions can still be accessed.

Why don’t you send your kids to public schools? Are you a white supremacist indoctrinating your children against the government?

If you check a few of these boxes, and definitely if you check all of them, chances are that you’re on some kind of a watch list.

Our world is now inhabited by robots trained to kill, many with “hive minds”, some as small as an insect and deployable by the millions. These robots are aided by data collection and artificial intelligence that allow ordinary civilians to be monitored twenty-four hours a day and make us all vulnerable to attack.

The scene in Dune where the drone insect attempts to assassinate Paul Atreides is not science fiction. It is very real.

In the video below, Elon Musk explains that “A swarm of assassin drones can be made for very little money just by taking the face ID chip that’s used in cell phones and having a small explosive charge and a standard drone and have them just do a grid sweep of the building until they find the person they’re looking for and ram into them and explode.”

Investigative journalist and author Annie Jacobsen talks about one of DARPA’s more controversial projects, the Hybrid Insect Micro-Electro-Mechanical Systems (HI-MEMS) program. Scientists put brain chips into the larvae of the Manduca sexta moth. With its artificial brain telling it what to do, the moth is shot off the arm of its operator and can kill a target up to two miles away.

Viruses can also be manipulated so that they can introduce new genes that give cells the ability to do things they don't normally do.

Like instantly reading soldiers’ minds, using tools such as genetic engineering of the human brain, nanotechnology, and infrared beams. Given how obsessed they are with making mRNA or saRNA (self-amplifying RNA) vaccines for everything, a technology that sends messages through our bodies, all of this becomes extremely worrisome.

The ultimate ambition is to control weapons purely by thought.

Put in that light, what Neuralink and these other brain chip companies are doing becomes very sinister indeed. Blackrock Neurotech has already implanted 50 people with a brain chip (and that’s just the information they give the public). If you believe that their ultimate goal is to improve people’s health, you are very naive.

How are we to defend ourselves against such “terminators”, perhaps even turned against us by our own governments?

Nobel Peace laureate Jody Williams is helping lead a campaign for a new international treaty to stop killer robots that can select targets and fire without a human being making the decision.

Williams said at a news conference that these lethal autonomous weapons “are crossing a moral and ethical Rubicon and should not be allowed to exist and be used in combat or in any other way.”

But realistically, how effective will that be? Nobody in power has any intention of stopping this runaway train.

It all started with the weapons industry. With powerful government organizations like DARPA. Then they sold it to us, convincing us it would make our lives better. They even got us to buy it at exorbitant prices. Imagine, millions of people pay their hard-earned money to buy technology that entraps them in a surveillance prison, enabling not only enemies but “friends” to hurt and kill them.

Imagine thinking, how cool, I want a robot dog. Its name is Spot. It’s just like Spot.

No, it isn’t.

I'm sure this is just scratching the surface. If we knew everything that's out there, we wouldn't be able to sleep!

The science fiction cartoon “The Jetsons” was way ahead of its time. How does one ascertain what is real any longer? Frightening times, my friends. Frightening times.