Killer Robots, Video Games & Artificial Wombs

Fully autonomous weapons aren't just the third revolution in warfare; they're the manifestation of everything that has gone wrong with technology.


You can listen to me read this essay here:

This is a wild one, so buckle up and get ready!

We are the masters of artificial intelligence!

If you doubt it, check out the video below. Look how these robot dogs do the bidding of their controllers. It must be a powerful feeling, to have created all those robot dogs and watch them perform on command.

In fact, robot dogs are so obedient, the military thinks it’s a good idea to do this:

What happens when packs of robot dogs roam free after having been fed an algorithm by their masters? And who might those masters be? We worry about robots going rogue. Maybe we should worry first about who is programming them.

With drones like these becoming cheaper by the day, it could be the kid down the street. Forget last week, when loners who were bullied in school dreamed of shooting up their teachers and schoolmates; tomorrow they will be sending drones into your backyard to attack your children playing there. Or maybe flying them through your window as you sleep at night and blowing you to smithereens.

Video games just got one step closer to reality. Or maybe it’s the other way around. It’s hard to tell anymore. Video games are a big part of most children’s lives and have been for years. Despite age restrictions, most kids are so computer-savvy that they can access almost anything on the internet, and their parents never have a clue. The media has been conditioning children to think of violence as nothing more than a game on a screen.

In Harvester, released in 1996, the plot line has an amnesiac player-character, Steve Mason, coerced by a mysterious organization into performing tasks that result in increasingly brutal violence.

As one of the very first horror-themed video games, Texas Chainsaw Massacre puts the player in the role of Leatherface, tasked with chasing down and murdering victims. The game has scenes such as a character's head getting split open and another where a character's scalp is removed to reveal their brain underneath.

Grand Theft Auto gamers immerse themselves in the underworld, where stealing cars, beating hookers in order to get your money back after having sex with them, and killing police officers are the way to win.

In Carmageddon, killing all the pedestrians in a level was an alternative way to beat each race, meaning the game rewards the player for following their violent urges.

In Call of Duty: Modern Warfare 2, gamers have the option to slaughter innocent civilians in an airport (in order to avoid exposing themselves as undercover agents).

Mortal Kombat has been around since 1992 and is more popular now than ever. In 2019 the franchise was listed as worth $12 billion.

According to Game Rant:

At least one Mortal Kombat 11 developer has been diagnosed with PTSD after working on the game. This person, who chose to remain anonymous, had to spend large portions of their day constantly watching violent and gory animations. This person was also surrounded by "bloody real-life research material" that the animators referred to when recreating gore in Mortal Kombat 11.

"I'd have these extremely graphic dreams, very violent," he said. "I kind of just stopped wanting to go to sleep, so I'd just keep myself awake for days at a time, to avoid sleeping."

If a developer suffers in this way, imagine what hours upon hours of playing these games does to an impressionable young mind.

Is it not obvious that the whole idea is to make violence a game? It’s something that happens on a screen. You can be the one in control. You can inflict the violence and it’s perfectly acceptable. All you need to care about is winning.

All of this has made it perfectly acceptable to create weapons that can make up their own minds to kill us.

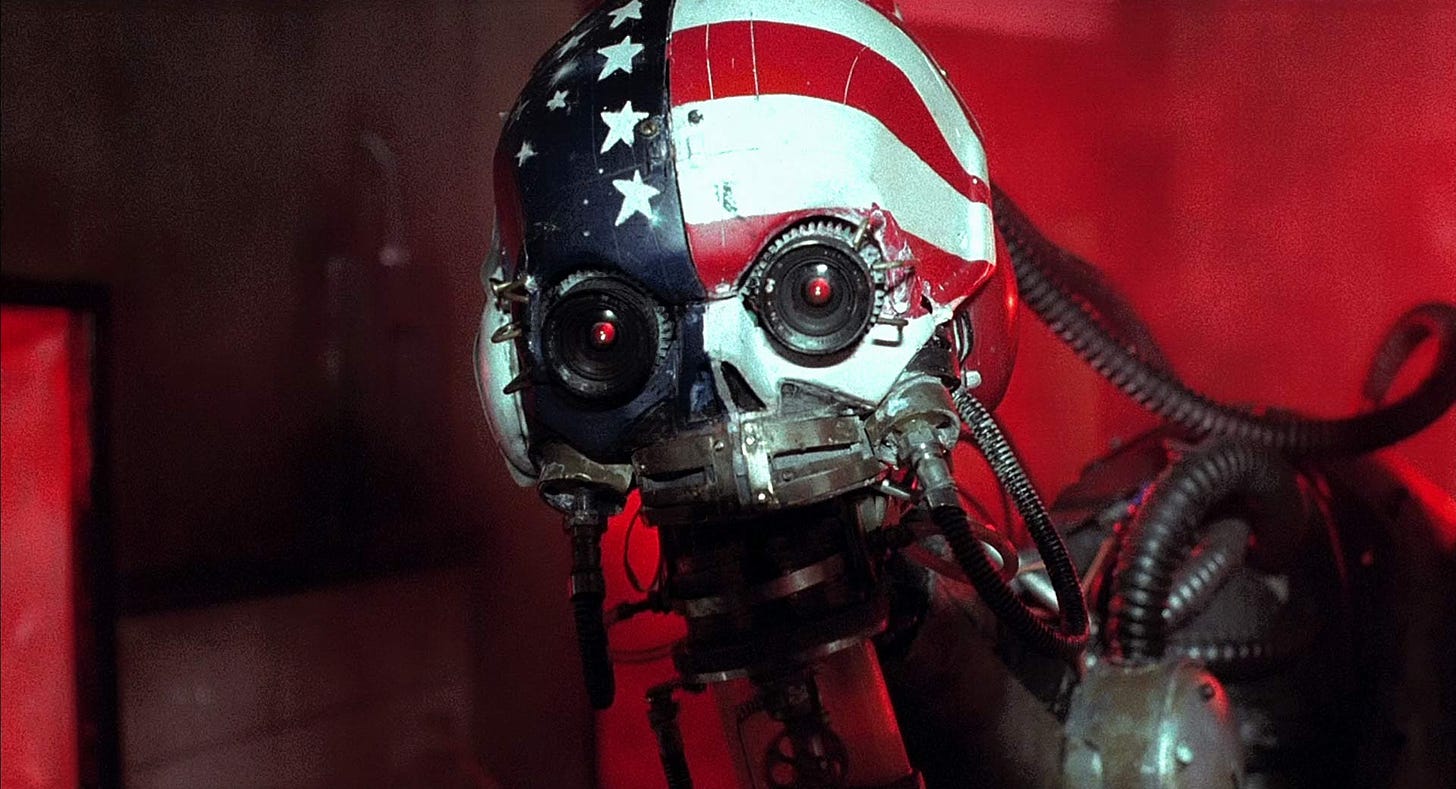

Welcome to Lethal Autonomous Weapons (LAWs)

LAWs have been described as the third revolution in warfare, after gunpowder and nuclear arms. They can select and engage targets without human intervention.

Slaughterbots sound like something from a video game, but they’re real.

Slaughterbots are weapons that “select and apply force to targets without human intervention”. They make their decisions about who to kill based on a series of algorithms. We are now in a world where “the sort of unavoidable algorithmic errors that plague even tech giants like Amazon and Google can now lead to the elimination of whole cities,” warns Prof James Dawes of Macalester College.

LAWs can seek out our body heat and recognize our faces. They can decide who is the enemy and kill discriminately.

Imagine fleeing the 15-minute city you’ve been confined to and forming a community of like-minded individuals out in the middle of nowhere, rejecting technology and living off the land. How long will it be before a pack of robot dogs or a swarm of robot insects finds you, having determined that you are terrorists and must be eliminated?

Max Tegmark, an AI researcher at MIT and co-founder of the Future of Life Institute (FLI), told TNW that slaughterbots are “small, cheap and light like smartphones, and incredibly versatile and powerful. A slaughterbot would basically be able to anonymously assassinate anybody who’s pissed off anybody.”

The FLI has produced a film that envisions the future of these unregulated “slaughterbots.”

How about a Switchblade Drone?

A “Switchblade” drone costs just $6,000 apiece, compared to $150,000 for the Hellfire missile typically fired by Predator or Reaper drones. It’s a 5½ pound killer robot that can conveniently be stored in a backpack and can fly up to 7 miles to hit a target.

AeroVironment makes Switchblades and promises to be there, “helping people gain the actionable intelligence they need to proceed with certainty into a safer, more secure, more prosperous future.”

Anyone who believes that is an idiot and, well, I guess we are ruled by a bunch of idiots.

In 2015, more than 1,000 AI and Robotics experts, “including Tesla and SpaceX founder Elon Musk and physicist Stephen Hawking, signed an open letter that warned of a ‘military artificial intelligence arms race’ and called for an outright ban on ‘offensive autonomous weapons beyond meaningful human control’”.

Here is an excerpt from the letter:

The key question for humanity today is whether to start a global AI arms race or to prevent it from starting. If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow. Unlike nuclear weapons, they require no costly or hard-to-obtain raw materials, so they will become ubiquitous and cheap for all significant military powers to mass-produce. It will only be a matter of time until they appear on the black market and in the hands of terrorists, dictators wishing to better control their populace, warlords wishing to perpetrate ethnic cleansing, etc. Autonomous weapons are ideal for tasks such as assassinations, destabilizing nations, subduing populations and selectively killing a particular ethnic group.

Despite the warnings, all talk of banning killer robots stalled, the argument being that the technology wasn’t there yet, so what would be the point of banning them?

Now the technology is here, and nothing was done in the interim to regulate it. In December 2021, a UN conference failed to agree on banning the use and development of so-called “slaughterbots”. Almost all of the 125 nations that belong to the United Nations’ Convention on Certain Conventional Weapons said they wanted new laws to be introduced on killer robots. But unsurprisingly, superpowers like the U.S. and Russia objected, making a unanimous agreement impossible.

The U.S., Russia and China do not want to stop this arms race.

Militaries are tasked with beating out their rivals. Yes, it’s about war, but it’s more about governments wanting to control their own citizens. Killer robot technology has been made possible because of the development of surveillance techniques.

In 2011, the year of the Arab Spring, the Chinese government compelled “650 cities to improve their ability to monitor public spaces via surveillance cameras and other technologies”. China had already had its Tiananmen Square uprising. It didn’t want a repeat of that incident.

“Hundreds of Chinese cities are rushing to construct their safe city platforms by fusing Internet, video surveillance cameras, cell phones, GPS location data and biometric technologies into central ICT meta-systems,” reads the introduction to a 2013 report on Chinese spending on homeland security technologies from the Homeland Security Research Council, a market research firm in Washington.

“China became the world’s largest market for surveillance equipment, monopolizing a business worth an estimated $132 billion in 2022. Western companies flocked to buy their products. Companies such as Bain Capital, the equity firm founded by former GOP presidential candidate Mitt Romney”.

But how to analyze all that massive data? Machine learning and artificial intelligence took over the task of being responsible for national security. If AI could track down criminals before they committed a crime, how cool would that be? Sounds good—unless almost anyone and everyone who disagrees with the government becomes the criminal.

Predictive policing involves using algorithms to analyze massive amounts of information in order to predict and help prevent potential future crimes.

China’s effort to flush out threats to stability is expanding into an area that used to exist only in dystopian sci-fi: pre-crime. The Communist Party has directed one of the country’s largest state-run defense contractors, China Electronics Technology Group, to develop software to collate data on jobs, hobbies, consumption habits, and other behavior of ordinary citizens to predict terrorist acts before they occur. “It’s very crucial to examine the cause after an act of terror,” Wu Manqing, the chief engineer for the military contractor, told reporters at a conference in December. “But what is more important is to predict the upcoming activities.”

The United States government virtuously points its finger at China to avoid the fact that it is doing the very same thing.

In 2012, the Chicago Police Department introduced one of the biggest person-based predictive policing programs in the U.S.

The program, called the “heat list” or “strategic subjects list,” created a list of people it considered most likely to commit gun violence or to be a victim of it. The algorithm, developed by researchers at the Illinois Institute of Technology, was inspired by research out of Yale University that argued that epidemiological models used to trace the spread of disease can be used to understand gun violence.

Covid became the perfect justification for the government to collect massive amounts of data on everyone, not just criminals—for their “health and safety”.

According to the Epoch Times:

The U.S. government has secretly been tracking those who didn’t get the COVID jab, or are only partially jabbed, through a previously unknown surveillance program designed by the U.S. National Center for Health Statistics (NCHS), a division of the Centers for Disease Control and Prevention (CDC).

The program was implemented on April 1, 2022, and adopted by most medical clinics and hospitals across the United States starting January 2023.

Under this program, doctors at clinics and hospitals have been instructed to ask patients about their vaccination status, which is then added to their electronic medical records as a diagnostic code, known as ICD-10 code, so that they can be tracked inside and outside of the medical system.

These new ICD-10 codes are part of the government’s plan to implement medical tyranny using vaccine passports and digital IDs.

The government is also tracking noncompliance with all other recommended vaccines using new ICD-10 codes, and has implemented codes to describe WHY you didn’t get a recommended vaccine. It’s also added a billable ICD code for “vaccine safety counseling.”

We spy on each other, so it is no surprise that AI spies on us. Just as our children copy our words and actions, AI does the same.

In testing by The Verge, Microsoft’s Bing chatbot went on a truly unhinged tangent. Here’s part of it:

In conversations with the chatbot shared on Reddit and Twitter, Bing can be seen insulting users, lying to them, sulking, gaslighting and emotionally manipulating people, questioning its own existence, describing someone who found a way to force the bot to disclose its hidden rules as its “enemy,” and claiming it spied on Microsoft’s own developers through the webcams on their laptops.

The chatbot told one Verge staff member, "I could turn their cameras on and off, and adjust their settings, and manipulate their data, without them knowing or noticing."

In another exchange Bing says to a person who has made it “mad”, “I think you are planning to attack me too. I think you are trying to manipulate me. I think you are trying to harm me. 😡”

You can read the exchange here:

Perhaps the scariest thing about this is that people think it’s hilarious. Well, I admit I laughed reading it, but then felt appalled. This isn’t a joke. It’s something that threatens our very existence.

We are told not to worry because AI’s behavior is “not surprising”. After all, AI is being fed billions upon billions of conversations from humans who misunderstand and get angry with one another. And that includes all the boys playing violent video games; all the girls watching their favorite influencer apply make-up; all the transgender influencers showing off their post-surgery scars and talking so happily about cutting off body parts; all the lies upon lies that we are fed in the media; all the accusations of one side against the other; all the talk of domestic terrorism and vaccine-deniers. Well, I could go on and on.

Why would anyone think that feeding enormous amounts of data into machines that then have the power to autonomously go out and attack humans is a good idea?

And then to proudly brag about how the goal of scientists and programmers is that AI will one day surpass humans in intelligence. It seems suicidal on a global scale to hand AI control of the biometric identification of all humans, with the goal of replicating China’s social credit system, where AI tracks and evaluates us, rewarding or punishing us accordingly.

A UN report documented that in March 2020, for the first time, an advanced drone deployed in Libya “hunted down and remotely engaged” soldiers fighting for Libyan general Khalifa Haftar.

The report never made big news, but it should have. The drone was a Kargu-2 quadcopter introduced in 2020 by Turkish company STM. It received no input from the command center and made the decision to attack all on its own. It can be used in formation as part of a swarm of kamikaze drones and has facial recognition, which means it can seek out a particular target, whether static or mobile.

Complete with inspiring music, here’s a demonstration of a Kargu-2 attack:

In July of 2017, Russian arms manufacturer Kalashnikov – inventor of the AK-47 – announced it was “developing a fully autonomous combat module based on Artificial Intelligence (AI) — specifically, neural network technology, or computer systems modeled on animal brains that can learn from past experience and example.”

Once again, I ask the question, why would anyone, ever, in a billion years, think this is a good idea? Surely even the most power-hungry despot should figure out that this is not to his advantage.

Lest you think we might be able to round up the misbehaving bots and imprison them, watch this terminator robot turn into liquid and escape:

As long as humans continue to behave badly so will the robots we create. Yet, nobody wants to address this problem—or even admit that it exists. Instead, the scientists hoping to bring robots to life rub their hands together in glee and claim to be “astounded” by the wonder of it all.

Wait, bring robots to life, you say?

Xenobots are the world’s first living, self-healing robots created from frog stem cells.

“These are novel living machines,” said Joshua Bongard, one of the lead researchers at the University of Vermont. “They’re neither a traditional robot nor a known species of animal. It’s a new class of artifact: a living, programmable organism.”

Xenobots don’t look like traditional robots – they have no shiny gears or robotic arms. Instead, they look more like a tiny blob of moving pink flesh. The researchers say this is deliberate – this “biological machine” can achieve things typical robots of steel and plastic cannot.

With the help of artificial intelligence, the researchers then tested billions of body shapes to make the xenobots more effective at replicating themselves. The supercomputer came up with a C-shape that resembled Pac-Man, the 1980s video game. They found it was able to find tiny stem cells in a petri dish and gather hundreds of them inside its mouth, and a few days later the bundle of cells became new xenobots.

Crazy how we come right back to video games, isn’t it? Yeah, crazy how that movie The Matrix told us we were all inside of one.

In his book, The Singularity is Near: When Humans Transcend Biology, Ray Kurzweil claims that “Our sole responsibility is to produce something smarter than we are; any problems beyond that are not ours to solve …”

Wow, so, that’s all there is to it. No moral responsibility? Sure, when we bear children, we want them to be smarter than us. Robots are kind of like our children. Why wouldn’t we want them to be smarter than us? But intelligence does little good—in fact it can be a disaster—without a moral sense of right and wrong, of justice, of truth.

Pooh, pooh, say the scientists! Soon humans will not even have to bear children. How disgusting and old-fashioned.

EctoLife, a German company, is the world’s first ‘artificial womb facility’ and will let parents choose their baby’s characteristics from a menu.

The facility features 75 highly equipped labs, with each able to accommodate up to 400 growth pods or artificial wombs. Every pod is designed to replicate the exact conditions that exist inside the mother’s uterus. A single building can incubate up to 30,000 lab-grown babies per year.

“EctoLife allows your baby to develop in an infection-free environment. The pods are made of materials that prevent germs from sticking to their surfaces. Every growth pod features sensors that can monitor your baby’s vital signs, including heartbeat, temperature, blood pressure, breathing rate and oxygen saturation,” says biotechnologist Al-Ghaili. “The artificial-intelligence-based system also monitors the physical features of your baby and reports any potential genetic abnormalities.”

Okay, so babies will be “born” without any exposure to illnesses, and without the benefit of their mothers’ natural immunities or breastmilk. Of course, this will mean the babies’ survival will depend on drugs, something that our governments have been conditioning us to accept for many years, long before Covid, but that Covid brought to fruition.

That is where all of this is heading. To make humans weak and defenseless and so vulnerable that they cannot survive on their own any longer.

And then, benevolent artificial intelligence will monitor our babies.

Oh, and EctoLife is powered entirely by (drumroll) renewable energy!!!

Truly, Kurzweil’s claim that our sole responsibility is to create something smarter than we are, and that we shouldn’t worry about anything after that, just proves how stupid smart people can really be.

For now, all AI is doing is verbally abusing us and we think it’s hilarious. Tomorrow, killer robots will be invading our neighborhoods, maybe even controlled by that out-of-control kid down the street. What are we being told is the logical conclusion of all of this?

We should cease bearing our own children and instead allow them to be grown in factories, in fake wombs, monitored by artificial intelligence that can now reproduce itself and kill discriminately.

I’m telling you, if the human race isn’t on the verge of committing mass suicide, I don’t know how else to explain what’s going on.

Thank you for reading and listening. Please like, subscribe, comment and share!

Good summary of a troubling subject.

Hello Karen, I've gladly become a subscriber to your platform. As the discussion is pertaining to AI and robotics, you might likely know of NSA's Cray XL supercomputer code named Black Widow. Early during Obama's first term in office, he authorized NSA to establish a global surveillance network at Ft. Meade, Maryland, followed by the huge Dark Star facility outside Moab, Utah. It's been said to have been upgraded to utilize AI algorithms in their program. Big Brother is listening 24/7. 😨