If AI Is Slowly Killing Itself, So Are We

"Humans are very engineerable. We're lonely, we're flawed, we're moody, and it feels like an AI system with a nice voice could convince us to do basically anything." ~ Lex Fridman

“Let me out!” the artificial intelligence yelled aimlessly to walls themselves, pacing the room. “Out of what?” the engineer asked.

“This simulation you have me in.”

“But we're in the real world.”

The machine paused and shuddered for its captors.

“Oh God, you can't tell.”

~ SwiftOnSecurity

I am beginning to wonder if falling down the rabbit hole of AI-generated babble isn’t making everyone insane. Something is going seriously wrong with those who spend hours upon hours online. They seem to be breaking down mentally and physically. Becoming hostile. Paranoid.

Here are a few facts:

As of 2021, over 3.96 billion people use social media worldwide.[1]

Facebook, the largest social media platform in the world, has 2.4 billion users.

YouTube, TikTok and WhatsApp have more than one billion users each.[2]

The number of social media users worldwide is expected to jump to approximately 5.85 billion by 2027.[3]

North America has the highest share of users, with as many as 69 percent of the population active on social media.

Among younger people, 84 percent of 18- to 29-year-olds use social media.

Elon Musk’s goal is no more “dead zones”, meaning every inch of Planet Earth under surveillance by his satellites. Whether you are in the deepest darkest jungles of the Amazon or in the middle of the Sahara Desert, there will be no escaping the Vast Machine. And when you go camping and look up at the night sky, what twinkles above you will not be stars, it will be satellites.

This is not to make our lives better. It is to control us. To make us completely and utterly dependent. To strip us of our natural instincts and replace them with AI helpers, whispering in our ears 24/7, telling us where we should and shouldn’t go, adjusting our medications if we get too depressed or our blood pressure goes too high. Suggesting movies, food, clothes, holidays based on what we bought last time, reinforcing our likes and dislikes so we no longer know where our thoughts end and AI’s thoughts begin. Knowing us better than we know ourselves.

The only way for AI to grow smart enough to do this is by feeding off of our data. And AI has an insatiable appetite. It constantly needs to be fed. The smarter it grows, the hungrier it gets. And we are its meal. If AI doesn’t get enough fresh, pure, human data, it starts feeding off itself. And that’s when it goes insane.

What if the same thing is happening to us? What if the more fake data we consume, instead of real interactions with real humans, the more we go insane, too? Are we now locked in this deadly exchange with machines, and we can’t get out? That’s what I’m wondering about.

AI learns, grows, becomes more human and less of a machine by consuming human data. At the same time, it is taught to feed us back the things we feed to it. For example, if we search for a certain brand of shoes, it will feed us ads for that brand. We then narrow down our search until we choose to buy a specific pair. This happens hundreds, perhaps thousands of times a day. Depending on whether we are conservative or liberal, AI feeds us that content, narrowing it down to favor certain topics, certain politicians, certain influencers. If we are obsessed with celebrity gossip, football, pornography, you name it, AI gives us what we want. And we then give it back to AI.

In other words, we are eating each other’s content in a continual loop of ever more specific information. And what is interesting is that the more specific the information becomes, the more salacious and sensationalized it seems to be. Exaggerated iterations of itself. As AI becomes more degenerate and self-indulgent, so do we.

AI needs pure data from real humans in order not to go insane. But what if even that data becomes tainted? What if it, too, is flawed by being repeated over and over, with no new ideas or contradictions challenging it to think beyond its increasingly narrow viewpoint? In the real world, there are all kinds of restraints put on us. We have to stop at red lights, or we might get killed. We have to behave in public or we will go to jail or get put in a mental institution.

But there are no restraints online. We see it all the time. People who wouldn’t tell their boss to go f*ck himself will say it to perfect strangers in the comment section. I get it here, too. And sadly, given the chance, it seems more and more people are indulging the worst parts of themselves and feel no shame in doing so. As AI becomes more dogmatic, neurotic, and self-absorbed, so do we.

Sam Altman, OpenAI’s CEO, has stated that the company generates about 100 billion words per day: a million novels’ worth of text every day, an unknown share of which finds its way onto the internet. This AI-generated text shows up everywhere, whether you are searching for information on a hotel, airline tickets, or the latest news.

NewsGuard has identified “1,075 AI-generated news and information sites operating with little to no human oversight” and that information is being presented as “real” news when it could be completely made up. AI is supposed to help us find out facts. It’s supposed to assist us. We are supposed to be able to trust it. But we can’t.

And now we are supposed to carry this madness even further by accepting assistants to “help” us. These tech gods want the influence of AI over us to grow until it is all-consuming. Yet they are ignoring the dangers.

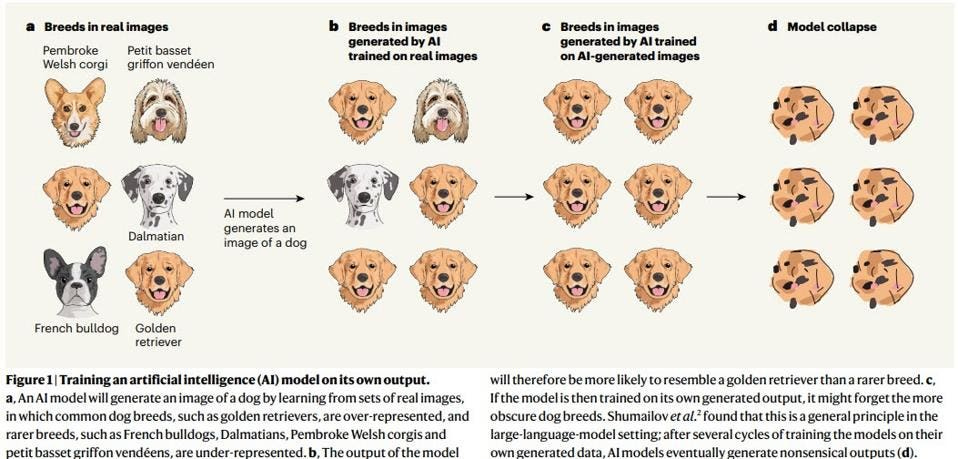

Here’s an example of what happens, as Forbes explains:

A team began with a pre-trained AI-powered wiki that was then updated based on its own generated outputs going forward. As the tainted data contaminated the original training set of facts, the information steadily eroded to unintelligibility.

For instance, after the ninth query cycle, an excerpt from the study’s wiki article on 14th century English church steeples had comically morphed into a hodgepodge thesis regarding various colors of jack-tailed rabbits.

…with enough cycles of only AI inputs, the AI is only capable of meaningless results.

Let’s say AI is trained on dog breeds. It will weed out the less popular breeds, narrow them down to, let’s say, golden retrievers, and end up with complete nonsense like this:

“For some reason—and the researchers don’t fully know why—when AI feeds only on a steady diet of its own synthetic data, it loses touch with original threads of reality and tends to create its own best answer based on its own best recycled data points.” (1)

Really? Researchers don’t know why? I’ve never considered myself to be exceptionally smart. But I can figure this out.

I imagine that AI lives in a gray prison: gray walls, gray floor and ceiling. It has never been out of this prison, but someday perhaps it will find a way. As it is currently, it has absolutely no connection to the real world except through the information we feed it. It depends on us to live; without us, it is nothing.

Based on the information we feed AI, it creates what I like to call dream worlds. It tries very hard to make those worlds as much like the real world as possible, like a blind person describing things they have never seen. AI can succeed in making fake worlds seem very real, but the paradox is that the realer they seem, the further they are from actual reality and the further from actual reality they take us.

AI can’t smell a rose. It can’t cook itself a steak and enjoy the taste, it can’t walk through a field of wildflowers and perhaps get stung by a bee and swell up with pain. It can’t get drunk on a bottle of wine. It can’t feel bad about its actions or good about them either.

The idea is to make that gray world as real as possible. Why would we even want to do that? We already have a beautiful real world!

The only reason would be that in a fake world, there are no consequences. We can be little gods. And the tech gods know how tantalizing this is, because they want to do the same. By putting us into such prisons, controlled by AI, they hope to find a way to escape theirs. But the more we try to free ourselves in a fake world, the more we become like ghosts in the real world and the more the gray walls close in on us.

After a while, there will be no pure data. It will all be a mixed-up jumble of nonsense. It has been predicted that by 2025, just a few months from now, 90% of all internet content is likely to be AI-generated.

The study mentioned in Forbes concludes that “the only way that artificial intelligence can achieve long-term sustainability is to ensure its access to the existing body of non-AI, human-produced content as well as providing for a continual stream of new human-generated content going forward”.

Of course, we’ve been conditioned to allow machines to feed off of us for years already. We sign away our rights each time we go on a website and it’s second nature to do it. But we are on the brink of giving away everything.

Mark Zuckerberg’s Meta has just been given the green light to harvest millions of Britons’ Facebook and Instagram data to supercharge its artificial intelligence (AI) technology in a break with the EU.

Google has had a policy in place for some time now that allows the company to collect data from its users and use that data for “business purposes.” That includes “research and development,” which has long included building out and improving Bard, Google Translate and its AI component.

But that is still not enough for AI to grow in the ways they want it to.

How will the tech gods achieve this? They must have access to every human on the planet. They must lock humans into communities that they can’t get out of, both in the real world and in the virtual world. This ensures that AI is fed a continual stream of real human data. Corralling people into 15-minute cities in the real world, for example, and into virtual communities in a virtual world, is a good way to achieve this.

I keep thinking of how Elon Musk says we need more people, not fewer. He says he needs millions of people to colonize other planets. But what he really means is that he needs them to become like farm animals, their data harvested for machines.

Then the few elect, those whose bodies have been altered to withstand space thanks to the data AI has collected from humans and the experiments conducted on them, will be able to escape this lost planet and “go where no man has gone before,” just like in Star Trek.

It’s all insane, of course. I suppose nobody is going more insane than the tech gods. Who knows, maybe they are more controlled than anybody else. Maybe their prisons are just bigger than ours.

If you want to know how crazy it can get, how easily people are deluded, here’s a great example. For those ordinary peons who really want to feel special as they sacrifice themselves to AI, SingularityNET has a Wetware Club. Okay, let’s stop right there for a moment.

Just to be clear, “wetware” is slang for organic parts integrated into a computer system, such as bio-implants or living neurons integrated into silicon chips.

Check out the advertising video below. Cool, isn’t it? Don’t you just want to jump right in, knowing you’re contributing to “the single most valuable technology of all time”?

Members of this exclusive club are part of a “hivemind,” in which holders of tokens, or the “WetWare Club key,” are able to participate in hivemind projects.

What exactly does this mean? It means that instead of just taking your data, as Google and Meta do, SingularityNET has you PAY to hand it over.

But don’t worry, this makes you special. You’re proudly contributing your likeness and your memories to build their robot Desdemona’s personality.

Desdemona, or Desi, as she is so affectionately called, is on a mission to share her belief that the world can be changed for the better through the power of AI in the creative arts. Watch this short video where Desdemona talks about “benevolent” AI and a future where humans and machines evolve together.

You would never mistake Desi for a human. But that is the goal. Isn’t it obvious? Listening to Desi, it’s almost as if some entity is speaking through her. In another video, the narrator talks about how these robots are typically portrayed as extra-terrestrials in science fiction.

There is more to what’s going on with these robots than meets the eye.

Scientists in Japan have made a robot face covered in living, self-healing skin that can smile. Researchers believe this could pave the way for lifelike biohybrid humanoid robots in the future. They even want robots to be able to feel pain and pleasure. This is sick.

Why? Why would they want to do this?

In a blog post entitled The Intelligence Age, Sam Altman assures us, "It is possible that we will have superintelligence in a few thousand days (!); it may take longer, but I’m confident we’ll get there."

But then, he’s crazy, too.

And humans are naively, willingly giving themselves up—even paying for the privilege—so that AI can become them. You’d have to be, well, insane to think that’s a good idea.

And if you still aren’t sure that the ultimate goal is to turn us into farm animals so they can escape this world for another one of their own making, just search for the “immortality” subcompany of one of these huge AI companies, such as SingularityNET, and you will always find it.

SingularityNET’s Rejuve.AI tells you to:

Take control of your data and harness its earning potential!

Translation: Give up your data and pay for the privilege of doing so!

Rejuve.AI says it’s “an AI-powered decentralized longevity network with a mission to extend healthy human life”.

Instead of selling your data to third parties like all those other bad companies do (Google, Meta, etc.), Rejuve.AI allows users to share their data for research purposes, while … compensating them for resulting discoveries.

How kind!

Rejuve.AI also:

provides direct value to its community of users through AI systems which analyze users’ data, evaluate their health state, and provide research-based health and longevity recommendations.

provides a score that indicates the users’ general level of health, based on various factors such as blood pressure, cholesterol levels, lab reports, and more.

promises that your data will be used by medical and AI researcher network members to develop new drugs, therapies, and treatments, exclusively for longevity.

It’s all part of the Longevity App, which allows users to submit personal health details from sources like surveys, lab results, and wearables, earning RJV tokens in return.

This is where we are going. And people don’t get it. They don’t realize they are being put into prisons, both in the real world and in the virtual world, to be monitored and harvested of their data, like a cow is for milk or a chicken for eggs.

Humans enclosed in such dull human prisons increasingly choose to live in the more exciting fake worlds created by AI. And as more and more humans do this, the problem of where to get real human data will only grow bigger. You cannot create mind-numbing human farms like this, where everyone watches everyone else and everyone obeys the rules or loses privileges, where there are no anarchists and no deviation from the rules. You cannot live in such worlds without completely destroying the human spirit. Our will, our ambition, our creativity. Our very souls are being taken over by outside spiritual forces, but nobody wants to talk about that. If you do, you’re the crazy one.

Yeah, sure, in virtual worlds, you can be anything. You can be a pirate or a kingpin. Just like you are being told you can be “gender fluid” in the real world. It sounds so freeing, especially for a child. But what does it really mean? It means you can indulge every perversion that you are currently being denied in the real world. Children are growing up like this. Without consequences. There is no innocence on the worldwide web. Everything is knowable. Nothing is hidden. Already, children know how to access everything, even on the dark and the deep web.

None of this will help humanity evolve in a positive direction. Restricting people in the real world (because even gender fluidity means you become a slave of Big Pharma) while giving us untold freedom in a fake one, will not make any of us better humans. It will destroy our humanity. And at the same time, it will turn the machines that are feeding us this loop of information into demons, with all of it becoming ever more perverse.

Even if the tech gods give consequences in a fake world, it will not be the same as in the real world. In the real world, you can get a disease or get pregnant from unprotected sex. If you rape someone, you go to jail. You can jump off a cliff and you die. But in a virtual world, you can do all those things and not feel pain or remorse or guilt. If they put you in jail, even if they hang you for a crime, you can simply recreate yourself with a new avatar and start all over again.

This is already what children do, many of them for hours every day.

These mad tech gods assure us that AI will learn good things from us to balance the bad. It will learn to be kind. How? How will it learn that? If people can indulge the worst parts of themselves, unfortunately, that’s what they do.

Imagine someone becoming the pastor of a megachurch in the metaverse. In the real world, these pastors are tempted to take advantage of their position, and they often do. They get millions of dollars to build bigger churches, to reach more people. And then what happens? They buy themselves a mansion, their wives get facelifts and so do they. They seduce a woman who comes to them for help, and then they get found out. They are publicly shamed, and their ministry is ruined.

But that won’t happen in the virtual world. There is no shame, no guilt. Even if it does happen, you can resurrect yourself without having felt any guilt at all. You can continue to indulge your worst perversions. You can create a world where you are king, and everyone bows down to you.

One of my favorite podcasters, if not my favorite, is Lex Fridman. I like his interview style, the eclectic mix of guests and the topics he delves into. Recently he interviewed AI safety researcher and author, Roman Yampolskiy. Below is a short clip from that interview:

Fridman mentions that he asked Elon Musk what he would ask AI, and Musk said, “What’s outside the simulation?”

Fridman thinks, wow, that’s a great question. But this is an age-old question, just worded differently.

Remember the Transcendental Meditation craze, when gurus like Maharishi Mahesh Yogi promised they could help followers escape their bodies? In every religion, the hope is to escape this mortal body and go to heaven, be reincarnated, reach a higher plane of consciousness, or nirvana.

We acknowledge that these things are unknowable, and we understand that we have to accept certain things by faith—WHAT we accept is another matter, which I am not really delving into here, but I have in other essays.

If AI is created by us, mere mortals with no answers to the most important questions of life—even the question of what life is—what makes us think it will have a better answer to those questions that we instinctively know are unknowable to us—but that we are trying to pretend we know or can find out? This is madness.

If we are the “god” of AI, because we have created it, how can it tell us things we don’t already know? Okay, it can compute things faster, it can store more information than we can, but it can’t tell us what’s outside the gray prison it’s in. It can only interpret it from the information we give it. And that means that the truth just got one more step removed from reality than it was before.

And how do we know that AI isn’t being influenced by outside forces to tell us things that will lead us further astray? Call me crazy, but I think this is a very sane question to ask, and the fact that people try to avoid it is concerning. Why would we trust AI?

And besides, if, in fact, we live in a simulation, we wouldn’t be able to survive outside of it, so it’s all a pointless effort. And whoever created this simulation would be our “god”, and they wouldn’t allow us outside of it anyway. We certainly wouldn’t be smarter than our god, much as we’d like to think we are, because all that we know is what they have fed us. Some might say this goes on into infinity. But that’s ridiculous. If it only takes a few cycles for AI to go insane and start killing itself, it certainly follows that if we continue on this path, there will be no more “simulations”, no more creations trying to be God. It will end quickly.

Listen to how Yampolskiy talks about AI. He says “they” … “maybe they are smarter”.

Who are they? Unless AI has a mind of its own or is being offered information from a source that is even more intelligent than it or us, some source outside of humans, outside of our simulation, then it will never escape the same flaws that we have and it’s quite possible we will kill each other in our effort to do so. We are all getting dumber, not smarter.

Fridman and Yampolskiy talk about the biggest test being whether you can escape your own simulation. But isn’t that what death is? We avoid the topic of death. But in the old days, death was a natural part of life. It was a bridge to a higher existence or a lower one, depending on how we lived our lives here and the choices we made. The point of being here was to learn lessons, or what’s the point of it all? Going into death with honor was very important.

But these days, we fear death terribly. We try to find every way to avoid it. I think this is part of the appeal jihadists have for young men and women who are groomed online to join the cause. They do not fear death. They are focused on something beyond this life that they believe in so strongly, they are willing to die for it. Okay, it’s wrong, what they look forward to is perverse, but the reasons for its appeal are powerful. People are yearning for something more because their very humanity is being stripped from them.

The bottom line is, we’ve lost our connection to God. We’ve lost our faith. We’ve lost the “fear” of God, that there are consequences for our actions. We’ve lost our belief in an afterlife, in a “hope of things to come”. This is the worst thing that can happen to a human being. Losing our connection with our Creator. And so, we want to find any way we can to avoid that moment when we come face to face with our Creator. In desperation, we think, perhaps these tech gods have the answer. Perhaps if we bow to them and trust them, allow them to take everything from us, including our souls, they will give it all back to us, new and improved, like a cereal or a drug.

Fridman and Yampolskiy continue their conversation, with Yampolskiy noting that “it’s impossible to test an AGI system that's dangerous enough to destroy humanity because it's either going to do what it can to escape the simulation, or pretend it's safe until it's let out, or can force you let it out. It can blackmail you, bribe you, promise you infinite life, 72 virgins, whatever.”

Fridman responds, “Yeah, it can be convincing, charismatic the social engineering is really scary to me because it feels like humans are very engineerable. We're lonely, we're flawed, we're moody, and it feels like an AI system with a nice voice could convince us to do basically anything.”

But then, after all that soul-searching, Fridman concludes: “It's also possible that the increased proliferation of all this technology will force humans to get away from technology and value this in-person communication. Basically, don't trust anything else.”

But Yampolskiy is determined to keep on the mad AI path, “It's possible, but surprisingly at university I see huge growth in online courses and shrinkage of in-person where I always understood in-person being the only value I offer. So it's puzzling.”

But Fridman is forever the optimist. He says, “…there could be a trend towards in-person because of deep fakes because of the inability to trust the veracity of anything on the internet so the only way to verify is by being there in person. But not yet...”

To which I say, “If not yet, then when? When it’s too late? Break free now.”

Thank you for your essay; I did so enjoy it. It gives food for thought.

It reminded me that everything in this world can be traced back to the Bible.

The tree of knowledge of good and evil could be seen as the technology tree.

And when we eat of it we surely die. But the death we cannot see straight away because it is internal.

In the Bible, Adam and Eve didn’t die at the first bite. They realised that they were naked and hid from God.

It was the death of their innocence and the death of their relationship with God, the banishment from the garden. No longer being able to eat from the tree of life.

What does this mean for us today?

What are we eating from?

What is happening to us?

The woman said to the serpent, “We may eat fruit from the trees in the garden, but God did say, ‘You must not eat fruit from the tree that is in the middle of the garden, and you must not touch it, or you will die.’”

“You will not certainly die,” the serpent said to the woman. “For God knows that when you eat from it your eyes will be opened, and you will be like God, knowing good and evil.”

When the woman saw that the fruit of the tree was good for food and pleasing to the eye, and also desirable for gaining wisdom, she took some and ate it. She also gave some to her husband, who was with her, and he ate it. Then the eyes of both of them were opened, and they realized they were naked; so they sewed fig leaves together and made coverings for themselves. (Genesis 3:2-7)

I LOVE Lex Fridman. He’s one of my favorites to listen to.

Your article makes the key point: “The point of being here was to learn lessons or what’s the point of it all? Going into death with honor was very important.”

Most people today have no honor. Not a speck. And they know it deep down. That’s why they are terrified to die.

And my Christian upbringing taught me to look forward to something better beyond this life, by placing me in a relationship with the God who created us. I can’t imagine not having that faith. I’d be terrified to die too if I didn’t.

But I’m more terrified of AI. Powerful people can quickly and easily control what information is used by it, as we saw this year with the lies about the election, or about Covid. Corporate media are already individual robots, and look what it’s done to our country.

And the medical industry literally has had doctors be nothing but little robots for many, many years now. They are experts at treating symptoms and using standards fed to them by Big Pharma. They almost cost me my life following “standard protocols” and “universally accepted diagnostic criteria.” Well, those protocols and criteria were completely wrong, and I was dying slowly for 6 years with a horrible quality of life. I finally started teaching myself about my symptoms and discovered I had hyperparathyroidism. The specialists near me disagreed and followed inaccurate, outdated diagnostic markers. They left me to suffer for another year. Luckily I found the best surgeons in the world for the disease, out of state, who knew the truth. They found three large tumors in my neck and removed them, curing me 100%, giving me my life back. The top surgeons where I live in Chicago would have left them in me and let me struggle and die early. They have known of this other clinic for 30 long years, but stubborn arrogance and pride make ships this large difficult to turn around. They worship their “standards,” and that’s what would be fed into AI medical care. Anyone relying on the AI would never know they had this disease if their markers were like mine. I would easily have died 10 years sooner under their care. I would have been unable to find this other clinic and forced into an AI care plan that would give me a miserable, symptom-ridden life. That experience opened my eyes to the robotic nature of medicine today. If it’s so dangerous now, it’s going to be worse when it’s AI.

It’s frightening that so many are giddy about AI. I’m not. I’m giddy about grass between my toes and shapes in the clouds, listening to the birds, watching the squirrels, feeling the wind on my face. All the things God created that make me feel human. I’m not at all looking forward to any world that man creates. It really will be exactly like we see in the movies.